Introduction to Paging

Right now, my laptop is running about 290 processes within a little over 8 GB of physical memory—and everything is solid as a rock. Have you ever wondered how all the processes on your computer manage to share the same physical memory without stepping on each other's toes and bringing the system down? I'm looking at you Classic Mac OS...

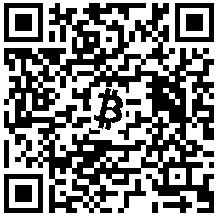

Thanks to modern paging units, it is now possible for the operating system kernel to provide each and every process with a virtual address space—making it appear to an individual process that it has "all the memory" to itself. For example, 32-bit systems are capable of addressing \(2^{32} = 4\) GB of physical memory. On such a system, each process would be placed into a "virtual world" with 4 GB of RAM that it doesn't have to share with other processes. This is achieved by partitioning the physical memory into small chunks called page-frames and mapping them into the virtual address spaces of the processes as necessary. The situation looks something like this:

This mapping is performed by the paging unit in a fashion that is transparent to the processes on the system. Individual processes access memory within their virtual address spaces using linear addresses. As you have probably guessed, the paging unit translates these linear addresses into physical addresses; thereby, providing the mapping from the virtual address spaces to physical address space.

Translating Linear Addresses

From here on, I'm going to use 32-bit Intel CPUs to base our discussion on—although, much of our discussion will be applicable to other architectures as well.

CPUs equipped with paging units only deal in linear addresses once the unit is enabled. This means that all addresses supplied as operands to assembly opcodes are linear addresses. It is the paging unit's job to convert these linear addresses into physical addresses before they are bussed to the memory controller, which in turn accesses the physical RAM.

A Brief Look at a Page Table Entry

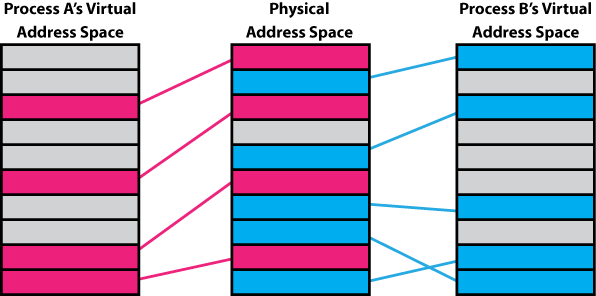

In order for the paging unit to perform the translation of linear addresses into physical address, it needs the assistance of the kernel. Specifically, the kernel needs to construct and maintain a set of page tables as well as a page directory for each process on the system—the kernel itself included! A page table is simply a collection of page table entries. Incidentally, so is a page directory. Both the page tables and the page directory are used to find the starting physical addresses of a particular page. Let's take a quick look at a page table entry:

We will look at the flag bits (0—11) in more depth in a future article, for

now just know that they exist. What we are really interested in at the

moment is the 20-bit Physical Base Address field. These 20-bits will

be used to form the 20 most significant bits of a physical address in

memory. Consequently, incrementing one of these base addresses by 1 jumps

ahead 4 KB in the physical address space. For example, if the 20-bits

where set to 0x7FC0B, this would correspond to physical address

0x7FC0B000. If we were to increment the 20-bit value by 1 from

0x7FC0B to 0x7FC0C, then this would correspond to the physical

address 0x7FC0C000. Notice how the increase by 1 moved us ahead 4 KB

in the physical address space from 0x7FC0B000 to 0x7FC0C000. Also

note how both of these addresses are on a 4 KB boundary. In other words,

both addresses are a multiple of 4096 bytes (\(2^{12}\) = 0x1000).

This is important because, recall, that the paging unit divides the

physical memory up into small chunks called page-frames. It just so

happens that page-frames on 32-bit Intel CPUs are 4 KB, which is why the

Base Address in the page table entries are 20-bits long! Incrementing

a base address by 1 advances to the next page-frame in the physical address

space. In the case of 4 KB pages, you can see that we are simply shifting

the base address 12-bits to the left to find the page-frame address—the

Linux kernel refers to this number of bits using the macro PAGE_SHIFT.

The Big Idea

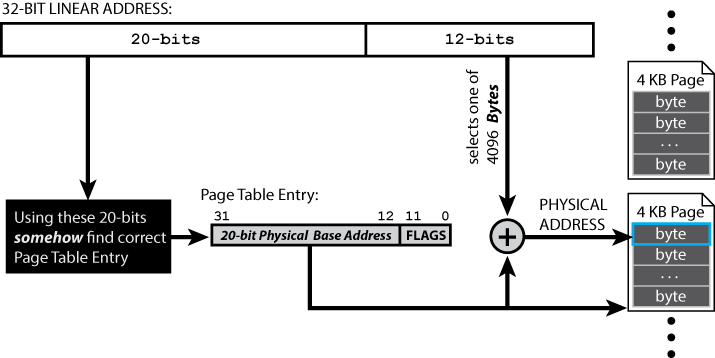

Let's not lose sight of the objective. We want to translate 32-bit linear addresses into 32-bit physical addresses. The big idea behind this translation is to first get the physical address of the page-frame containing the byte we want to access. We will do this by somehow finding the page table entry that corresponds to the desired page-frame. Next, we find the desired byte within that page-frame by looking at the 12 least significant bits of the linear address. (We need 12-bits because page-frames contain 4096 bytes and each byte is individually addressable.) The situation looks something like this:

Now the question is, "Given a linear address, how the heck do I use the higher order bits (i.e. the ones not being used to define the page offset) to find the page table entry I need?" Before we can answer that question, we need to talk a bit more about how the page table entries are organized in memory.

Enter the Page Table

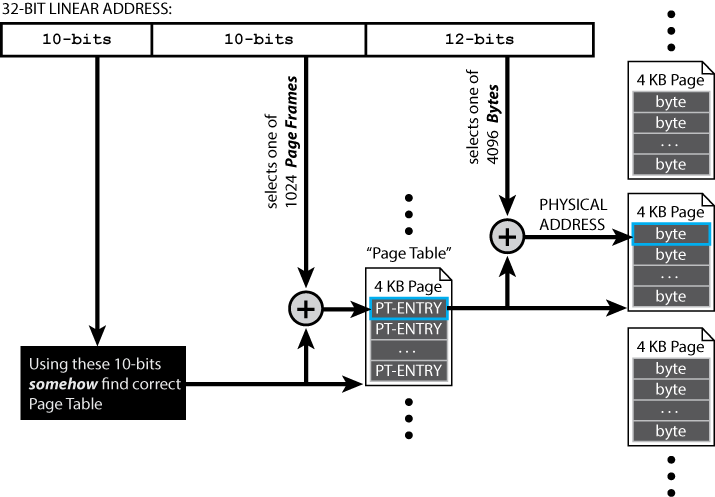

Recall that once the CPU enables paging, physical memory is divided into page-frames and linear addresses are used for everything! This means that if we are to store page table entries in memory, they themselves must be stored in pages!

So, for a system like the one we are discussing that uses 4 KB pages and 32-bit (i.e. 4 byte) page table entries, we can manage to stuff \(4096 / 4 = 1024\) page table entries into a single page. Now, we just need a way to select the page table entry we want within that page of 1024 entries. For this, we need 10-bits:

We call such a page filled with page table entries, simply, a page table. Each page table entry in a page table points to a 4 KB page-frame that contains actual user data. That means that a single page table, in this instance, can be used to manage \(4096 \times 1024 = 4\) MB of memory. Neat.

If we want to be able to address all 4 GB of physical memory, then we will obviously be needing multiple page tables! To solve this problem, we introduce yet another collection of page table entries to help us manage our many page tables. It is called...

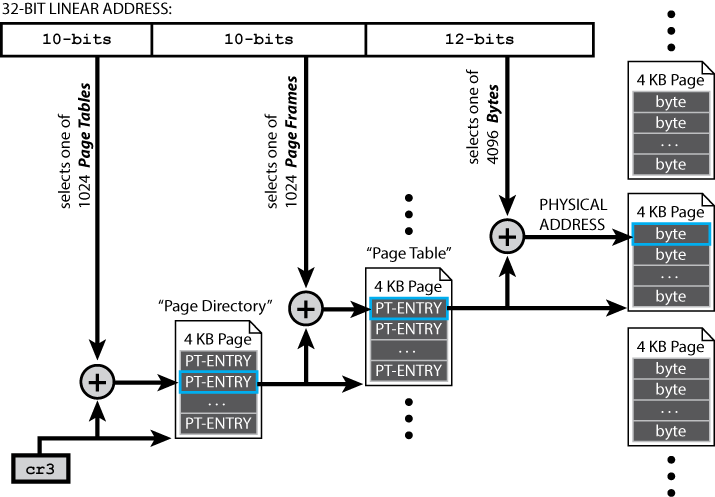

The Page Directory

In order to manage all 4 GB of physical memory, we will be needing 1024 page tables. Recall that a single page table fits entirely within a single page-frame. Yes, a page-frame! So, finding the page table containing the page table entry that points to our byte we want to access is just a matter of finding the page-frame containing the correct page table... whew! Now, if only we had a way of locating individual page-frames... oh wait, we totally do, and its called a page table entry.

Specifically, we will need 1024 page table entries to keep track of the

locations of our 1024 page tables in physical memory. Luckily, we can fit

all of these 1024 page table entries within a single page-frame. We call

that page-frame, the page directory and there is only one of them for

each process running on the system. When a process gets scheduled to a

CPU, the address of that process's page directory is shoved into CPU

control register 3 (cr3) so that it can be found by the paging unit.

Because the page directory contains 1024 page table entries, we will need

10-bits (\(2^{10} = 1024\)) to select the entry containing the base

address of the page table we need. The following diagram will hopefully

make all of this clear:

This act of partitioning the linear address and using the 1st 10-bits to find the correct entry in the page directory, the 2nd 10-bits to find the correct entry in the page table, and last 12-bits to find the correct byte within the page-frame is called the page walk. Incidentally, the page walk is unsurprisingly slow. So, once a linear address has been translated to the physical address of a page-frame using the page walk, the mapping is stored in a temporary caching mechanism called the translation look-aside buffer (more affectionately known as the TLB). Address translations for frequently/recently accessed pages are cached in the TLB to improve system performance. The number of entries that can be stored in a TLB is dependent on the architecture and can range from tens to thousands. Consequently, TLB entries are a valuable resource under constant contention. The situation is particularly dire for processes, such as databases, that do lots of work on large datasets spanning many many pages. For such situations, it may be beneficial to increase your page size.

Large Pages

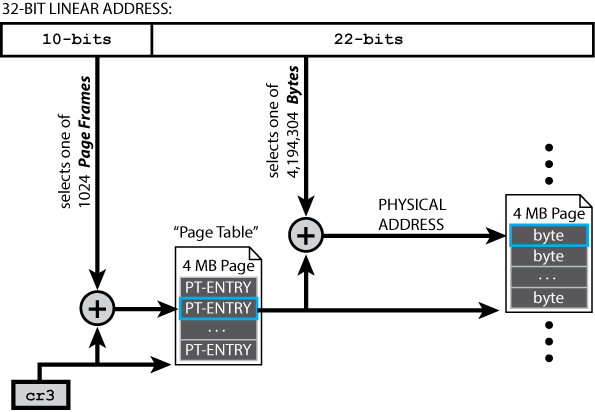

It is completely possible to work with page sizes other than 4 KB. The following diagram depicts a situation employing 4 MB pages:

A huge benefit to using these 4MB pages over 4 KB pages is that fewer TLB entries are needed to cache large contiguous memory regions that see frequent access. For example, if you have a process that does tons of work within a 4 MB array on a system using 4 KB pages, you are looking at roughly a thousand TLB entries. The same 4 MB array on a system using 4 MB pages would require only a single TLB entry! This of course, comes at the penalty of allocating large 4 MB pages in situations where only a few kilobytes were requested.

Now, the great thing about the 4 MB paging scheme depicted in the above diagram is that it is compatible with the 4 KB paging scheme we discussed earlier. If you squint and turn your head sideways, you can see that is is possible to plant entries in the page directory of the 4 KB scheme that point directly to jumbo 4 MB page-frames. All you need is a way to tell the paging unit that the entry it found in the page directory is for a 4 MB page and not a "normal" 4 KB page. Luckily, there is a size flag in a page table entry that can help you with that problem. This hybrid system capable of addressing both large and small pages is known more commonly as page size extension (PSE). We will save this, and other paging concepts relying on the mysterious flag bits, for future discussions.

In Closing

Here we talked about the basic ideas relating to virtual address spaces and how linear addresses are translated to physical addresses through a process known as the page walk. We discussed the fundamental role of page table entries as providers of page-frame offsets within the physical address space, and we showed how these page table entries are grouped together to form the page table and page directory data structures. Finally, we showed how linear addresses are partitioned and used to index into the page directory, page tables, and data pages. At this point you should be well on your way to better understanding how a modern computer provides insulation between the various running processes and how those processes are able to locate the information they are looking for within RAM.